Haptic Speech

Lead: Karon MacLean

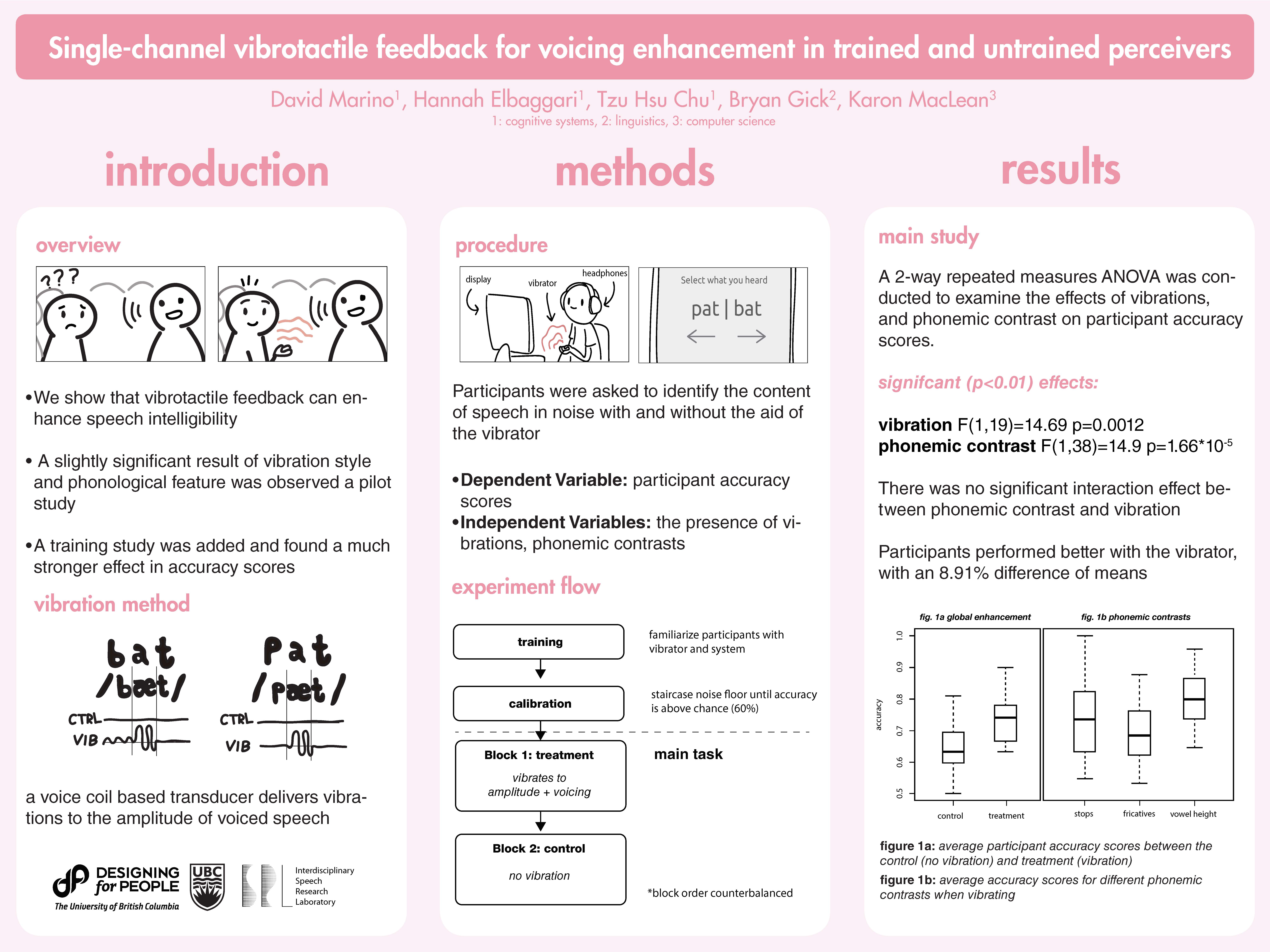

This translation project proposes using tactile stimuli to enhance the intelligibility of speech in challenging listening conditions, whether the listener is partially deaf or in a loud environment. Bryan Gick (Linguistics) adds his expertise in multimodal speech intelligibility enhancement to MacLean’s experience in tactile communication.

This concept differs from past approaches by combining linguists’ knowledge of how the brain processes specific linguistic elements with the fast processing and embedded tactile displays available on modern handheld devices. The project emphasizes only the key phonological features that are crucial to intelligibility and that drop out in the presence of masking noise or reduced hearing ability. Beyond its potential effectiveness, this approach is exciting because it could be delivered with high accessibility: the user simply holds a device with a microphone and a vibrotactile display in one’s hand, with no hearing aid required. We therefore see this concept as having high potential for commercial translation with relatively little further research investment.

The team recently completed a pilot study (which won a Best Poster prize at the DFP Design Showcase) demonstrating real-time processing capability and delivery efficacy on a handheld device. The results were intriguing: despite a significant lag between the acoustic and vibrotactile signals (caused by the time needed to transform the acoustic signal into a vibrotactile one), participants performed just as well as when there was no lag at all. This is good news for implementing a real-time system, but it leaves open the question of why, from a cognitive-systems perspective, even a delay of +300 ms did not affect accuracy scores.

Why does the vibrotactile signal enhance accuracy scores at all? Is it supplementing linguistic information in some way? Or is it merely an attentional aid, something that guides the user’s attention to the relevant speech signal? These are the questions the Haptics team seeks to answer next.

Possible Translation Partners: Google Labs, Tactile Labs (Montreal), Beltone, the Canadian Hearing Society, and the Western Institute for the Deaf and Hard of Hearing